Two Types of Mainstream Method for Text-to-SQL

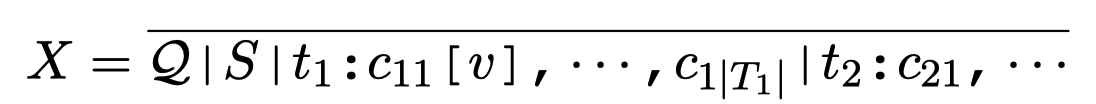

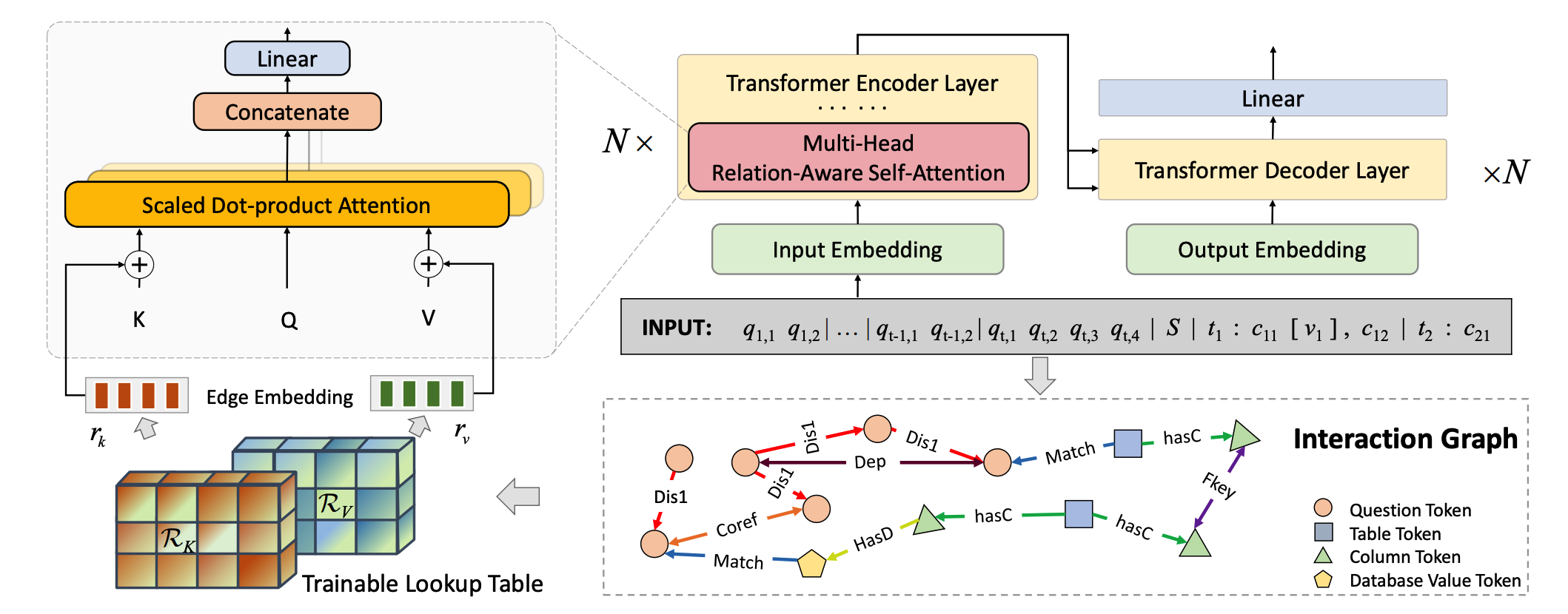

Graph Structure based method

- Using graph structures to encode various complex relationships between inputs.

- Pros:

Better representation of structural information.

- Cons:

The powerful representation capability of PLM not be fully utilized.Most AST-based decoder missing the real value.

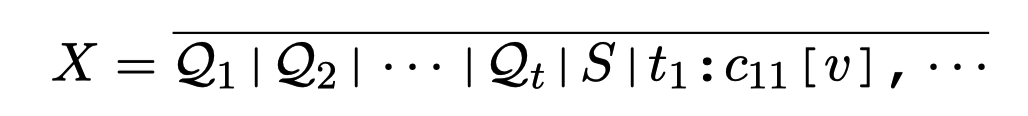

Seq2seq Method by just fine tuning PLM

- Fine-tuning a pre-trained PLM(e.g. T5-3B) could yield results competitive performance with state-of-the-art.

- Pros:

Make full use of the powerful representation capabilities of PLMThe predicted SQL query has real value

- Cons:

Simple serialization results in the loss of structural information

Can we combine the two approaches?